Defaulting to user installation because normal site-packages is not writeable

Requirement already satisfied: pymongo in /cloud/python/lib/python3.8/site-packages (4.6.1)

Requirement already satisfied: dnspython<3.0.0,>=1.16.0 in /cloud/python/lib/python3.8/site-packages (from pymongo) (2.5.0)

[notice] A new release of pip is available: 23.0.1 -> 24.0

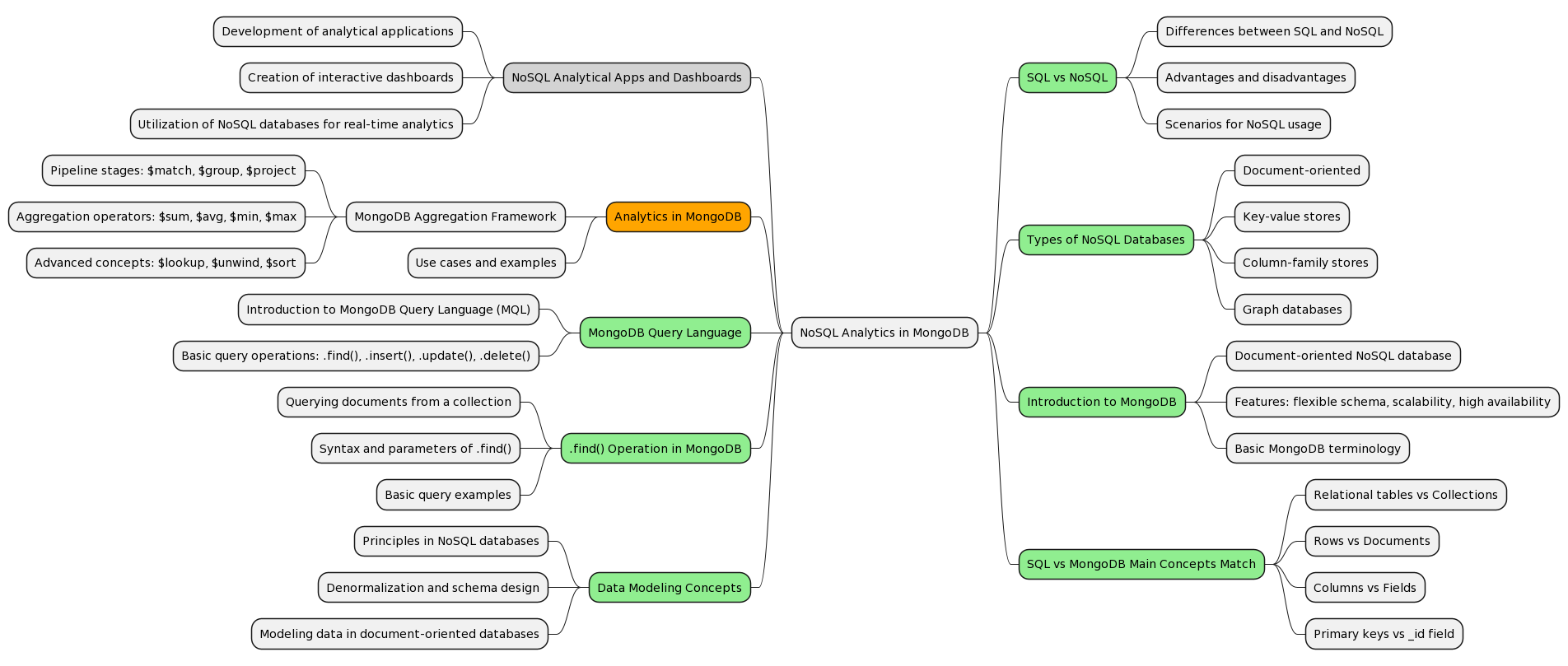

[notice] To update, run: /opt/python/3.8.17/bin/python3.8 -m pip install --upgrade pipIntroduction and Place in the Course (3 min)

Also, we would have “entry ticket” Formative task for Pre-Assessment:

- Some reminder on key sql / dplyr ideas

- Some reminder on mongodb vs sql terminology

- Something in poll everywhere/formative

Introduction and Place in the Course (2 min)

So far in the program you discussed basics of SQL databases, as well as data manipulation frameworks in R and Python

In what we have already covered:

- basic ideas behind NoSQL databases and how diverse this ecosystem is

- key concepts behind MongoDB as document-oriented NoSQL database

- main elements of simple CRUD queries in MongoDB

- PyMongo as a way to connect to MongoDB from Python

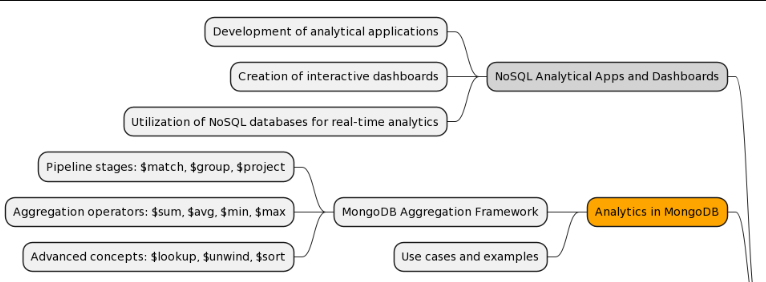

By the end of this course we will:

- Prototype an analytical app for a music streaming service using MongoDB, including aggregating user preferences and listening history data to generate meaningful statistics for two separate stakeholders.

By the end of this module we will:

- Analyze and optimize MongoDB schema designs for analytics, applying evaluation skills for effectiveness and efficiency.

- Evaluate MongoDB Aggregation Framework stages, using critical thinking to assess their contributions to data aggregation.

- Synthesize data aggregation knowledge across MongoDB, SQL, and dplyr/pandas, identifying syntactic and functional similarities and differences through creative problem-solving.

Case Study: Prototyping an Analytical App for a music streaming service (2 min)

prototype an analytical app for a music streaming service using MongoDB, including aggregating user preferences and listening history data to generate meaningful statistics for two separate stakeholders.

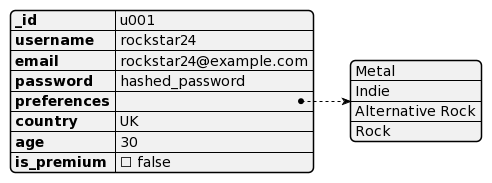

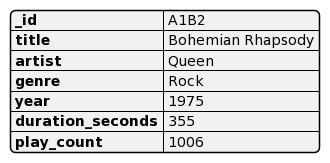

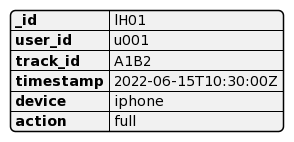

We will start from a simple database structure with three collections:

|

|

|

|

Database connection and logistics

There is already an example database for our case. As we progress with our case, you will create and expand your own copies of this database.

Connection details:

We usally take a Data Analyst perspective, which has its limitations. We need to learn how to step into our user’s shoes

- It is difficult to think as a user…

- One of the best ways to do it is to simulate realistic (but not necessarily real) interactions with user

- Prototyping user-facing app might help! And it is easy

- And we can make it connected to real data

- Open the app DemoApp

- Enter your username

- “Star” artists you like

- Press “Play” for some of their songs (this will just add a record into our DB, we are not real Spotify)

- See records updating as we go!

Here we will show how data they add to the app updates in the database straight away

- TODO render in table, show side by side

result = db.users.find().sort('_id', DESCENDING)[0:3]

for element in result:

pprint.pprint(element){'_id': ObjectId('65d65b5420ed55b382164cd4'),

'age': 30,

'country': 'CA',

'email': 'Fred@example.com',

'gender': 'Male',

'is_premium': True,

'password': 'hashed_password',

'preferences': [],

'username': 'Fred'}

{'_id': 'u015',

'age': 19,

'country': 'Canada',

'email': 'emma@example.com',

'gender': 'Female',

'is_premium': True,

'password': 'hashed_password',

'preferences': ['Hip Hop', 'Rap'],

'username': 'Emma'}

{'_id': 'u014',

'age': 21,

'country': 'UK',

'email': 'jack@example.com',

'gender': 'Male',

'is_premium': True,

'password': 'hashed_password',

'preferences': ['Indie', 'Alternative Rock'],

'username': 'Jack'}result = db.listeningHistory.find().sort('_id', DESCENDING)[0:3]

for element in result:

pprint.pprint(element){'_id': 'lH99',

'action': 'full',

'device': 'windows laptop',

'timestamp': '2023-11-07T14:05:00Z',

'track_id': 'M3N4',

'user_id': 'u007'}

{'_id': 'lH98',

'action': 'full',

'device': 'mac',

'timestamp': '2023-11-06T18:30:00Z',

'track_id': 'K1L2',

'user_id': 'u007'}

{'_id': 'lH97',

'action': 'full',

'device': 'mac',

'timestamp': '2023-11-05T16:10:00Z',

'track_id': 'I9J0',

'user_id': 'u007'}Why MongoDB fits to our case? (2 min)

prototype an analytical app for a music streaming service using MongoDB, including aggregating user preferences and listening history data to generate meaningful statistics for two separate stakeholders.

Last time we discussed some heuristics for selecting noSQL solutions. Why this problem might require MongoDB?

- source data with nested flexible structures

- need for agile analytics development with schema changes

- as a place to connect real-time streaming analytics

- sadly, you can just inherit that decision from other stakeholders

::::::

Our main tool: MongoDB aggregation framework (2 min)

Pipeline - different from simple query syntax (which we covered) and map-reduce framework (which we are not going to)

here should be some comparison between find and aggregation pipelines, shared elements like limit and order

Some benefits:

- Allows for middle complexity data processing tasks directly within MongoDB.

- Provides Advanced Data Manipulation tools for detailed data analysis.

- Can handle schema changes for evolving data structures.

- Can work as low-entry streaming analytics

Some considerations:

- No silver bullet

- Both SQL and NoSQL ecosystems are huge and the choice between SQL-based, Hadoop-based, other analytical solutions is complex

- For some SQL-oriented data tools (e.g. Tableau) there will be extra work to efficiently use it with Mongo

Introduction to the Aggregation Framework

- Aggregation Framework vs SQL aggregation or dplyr/pandas to show connections in the program, note that in the Instructional notes handout

Simple Pipeline

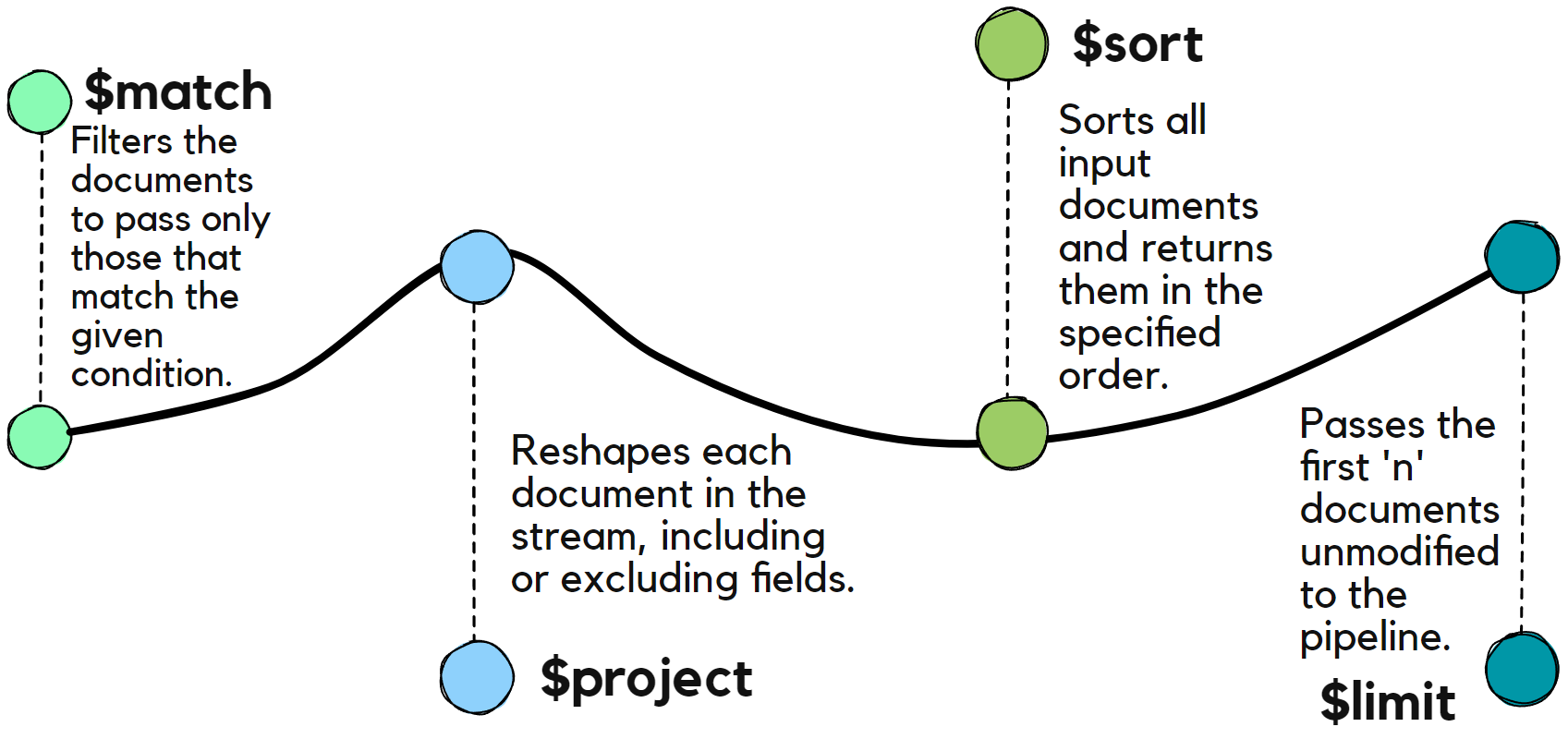

$match Stage

- Filters documents to pass only those that match the specified condition.

- Examples:

"{$match": { "country": "UK" }}"{$match": { "age": {"$lt": 25}}}

$project/$set/$unset Stage

- Reshapes documents by including, excluding, or renaming fields.

- Examples:

{"$project": {"genre": 1, "_id": 0}}

$limit Stage

- Limits the number of documents passed to the next stage.

- Example:

{"$limit": 5}

$sort Stage

- Sorts documents based on specified fields.

- Example:

{"$sort": { "age": -1 }}

Composing Simple Aggregation Pipeline (5 min including Formative)

So, now we know enough stages to compose simple pipelines. Actually, as some of you might have thought already, this is very similar to how basic .find queries work. The real power of pipelines comes with a couple of other operators but for now let us discuss how we will approach creating pipelines.

Combining stage definitions with programming language capabilities gives us powerful instruments: we can define each stage separately, and connect them to each other in different pipelines (MongoDB folks call this composability)

tracks = db.tracks

match1 = {"$match": {"year": {"$gt": 1980}}}

project1 = {"$project": {"artist": 1, "_id": 0, "genre": 1, "year": 1}}

limit3 = {"$limit": 3}

sort1 = {"$sort": {"year": -1}}

pipeline1 = [match1, project1, limit3, sort1]

pipeline2 = [match1, project1, {"$limit": 10}, sort1]

pipeline3 = [ {"$limit": 10}, match1, project1, sort1]

result = db.tracks.aggregate(pipeline1)

# for element in result:

# pprint.pprint(element)

pprint.pprint(list(result)) #NB! do not use with large result sets[{'artist': 'Oasis', 'genre': 'Rock', 'year': 1995},

{'artist': 'Michael Jackson', 'genre': 'Pop', 'year': 1983},

{'artist': 'Michael Jackson', 'genre': 'Pop', 'year': 1982}]Aggregation Pipeline

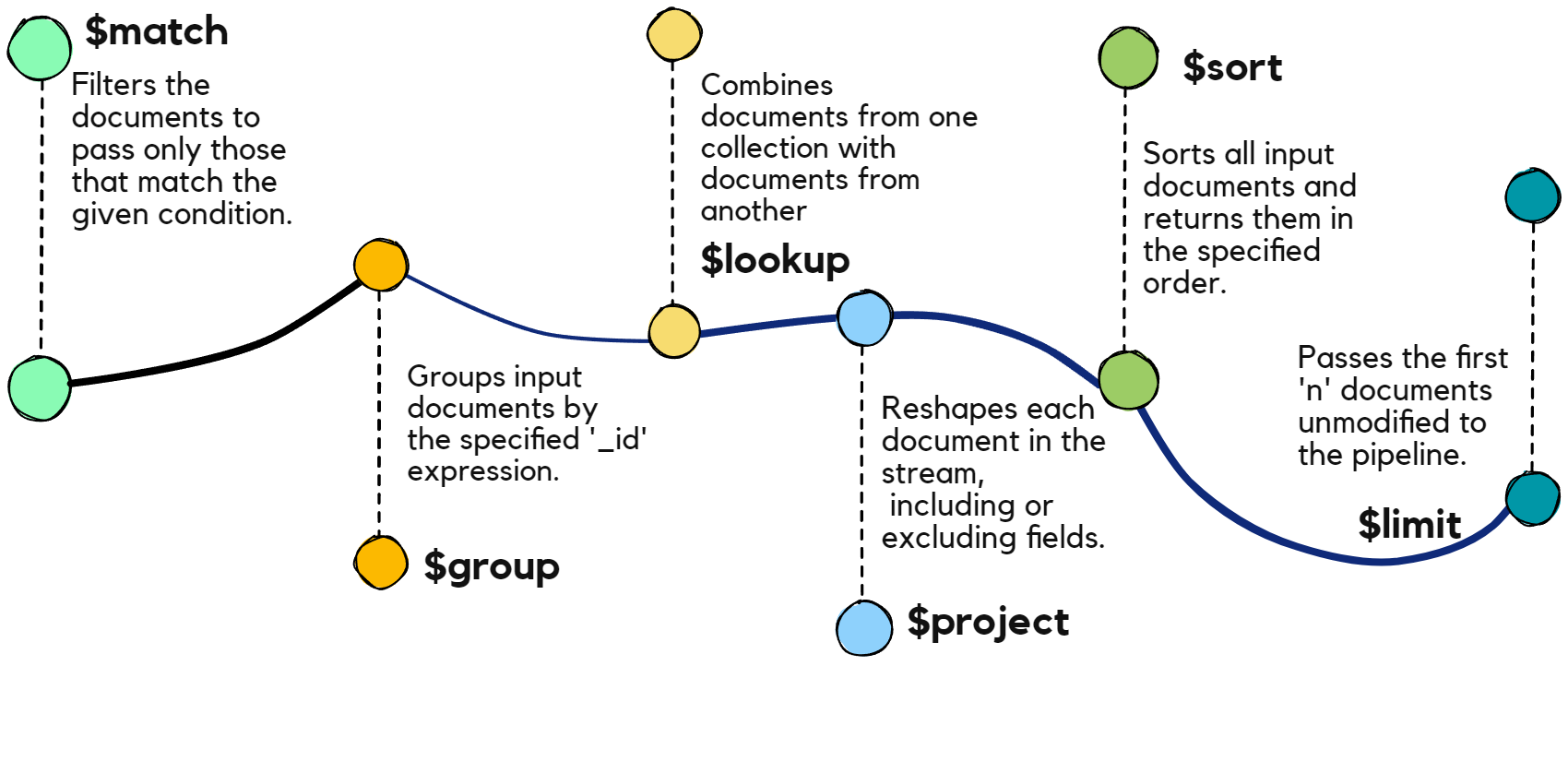

$group Stage

- Groups documents by a specified key and applies accumulator expressions, which you will get to know in more details during the lab and homework.

- Examples:

python {"$group": { "_id": "$genre", "total": { "$sum": 1 } }}#mind $genre for a field namepython {"$group": { "_id": "$genre", "count": {"$avg": "$year"}}}

- TODO: Add predictive questions as MCQ: what would we expect to happen?

- When order of operations would matter? (show

[group1, limit3, sort1], ask about[limit3, group1, sort1]) - How to debug pipelines step by step

group1 = {"$group": {"_id": "$genre",

"ntracks": {"$sum": 1}}}

limit3 = {"$limit": 3}

sort1 = {"$sort": {"ntracks": -1}}

pipeline1 = [group1, limit3, sort1]

pipeline2 = [limit3, group1, sort1]

result = db.tracks.aggregate(pipeline1)

# for element in result:

# pprint.pprint(element)

pprint.pprint(list(result)) #NB! do not use with large result sets

print("\n")

result = db.tracks.aggregate(pipeline2)

pprint.pprint(list(result))[{'_id': 'Hip Hop', 'ntracks': 4},

{'_id': 'Folk', 'ntracks': 1},

{'_id': 'Alternative Rock', 'ntracks': 1}]

[{'_id': 'Rock', 'ntracks': 2}, {'_id': 'Folk Rock', 'ntracks': 1}]- TODO https://www.mongodb.com/docs/manual/core/aggregation-pipeline-optimization/

- TODO show manipulation of pipeline stages, reuse

- TODO link and show how to use https://www.mongodb.com/docs/manual/reference/operator/aggregation/

Summary and Recap:

- Recap stages of the pipeline

- Does order matter?

- Can we repeat stages?

Questions

- What are differences and similarities between MongoDB aggregation framework and SQL/Dplyr/Datatable?

- Where you can apply the framework in your own projects?

Last of the crucial pipeline stages is $lookup

$lookup

{

"$lookup":

{

"from": "users",

"localField": "user_id",

"foreignField": "_id",

"as": "user_details"

}

}Example: Let’s get all

lookup_tracks = {

'$lookup': {

'from': 'tracks',

'localField': 'track_title',

'foreignField': 'title',

'as': 'track_details'

}

}

match_lt1980 = {

'$match': {

'track_details.year': {"$lt": 1980}

}

}

group_playcount = {"$group":{"_id": "$track_details.genre", "playcount" : {"$sum": 1}}}

sort_playcount = {"$sort": {"playcount": -1}}

pipeline = [lookup_tracks, match_lt1980, group_playcount, sort_playcount, limit3]

# Execute the aggregation

results = db.listeningHistory.aggregate(pipeline)

for result in results:

print(result)How to make the query more efficient? - Start from tracks, $match first?

Hands-On Practice with out Case

What we can do with this data to help our users enjoy the app?

Example (live coding): Playlists for millenials

- Select genres with tracks after 1980 played more than 100 times

- Start with defining which stages we need and plain text descriptions of what we expect on each!

- Define order

- Define if we need any stages more than once

#match:

#group:

#sort:

#limit:

# result = db.tracks.aggregate([

# ...

# ])

#

# for element in result:

# pprint.pprint(element)result = db.tracks.aggregate([

{ "$match": { "year": { "$gt": 1980 } } }, # Match tracks released after 1980

{ "$group": { "_id": "$genre", "totalPlayCount": { "$sum": "$play_count" } } }, # Aggregate total play count by genre

{ "$match": { "totalPlayCount": { "$gte": 100 } } } # Match genres with total play count greater than or equal to 100

])

for element in result:

pprint.pprint(element){'_id': 'Hip Hop', 'totalPlayCount': 4808}

{'_id': 'Alternative Rock', 'totalPlayCount': 907}

{'_id': 'Rock', 'totalPlayCount': 1810}

{'_id': 'Pop', 'totalPlayCount': 13412}::::::

MongoDB pipelines checklist

- Define stages and what we expect on the inputs and outputs (and write it down!)

- What order we want them to be in and why?

- Do we need to use some stages more than once?

- In your code, first define every stage and assign to a separate variable

- Define your pipeline

- Improve and test it iteratively

Two personas

:::::: {.cell .markdown}

Now let’s generate some ideas of useful features we can prototype for our users!

Design it in a way which includes non-coding activity in the room

- We look at the formative answers and select one idea

- We ask students to describe how would they approach that according to pipeline checklist.

- If there is coding in classroom, they can test their ideas

- If no, they go with checklist after brief discussion to the lab/homework

Checklist for formative:

- Define stages and what we expect on the inputs and outputs (and write it down!)

- What order we want them to be in and why?

- Do we need to use some stages more than once?

- In your code, first define every stage and assign to a separate variable

- Define your pipeline

- Improve and test it iteratively

What we learned today and where are we going next

Homework

Task 1: Find the top 5 most listened-to tracks overall

- Start with defining which stages we need and plain text descriptions of what we expect on each!

- Define order

- Define if we need any stages more than once

#match:

#group:

#sort:

#limit:

# result = db.listeningHistory.aggregate([

# ...

# ])

#

# for element in result:

# pprint.pprint(element)