Integrative AB-Testing Experience: Course Modules and Simulators

These are notes on designing modules and a course outline for an intermediate-to-advanced course on digital experiments and AB testing. They reflect iterative development: the materials were tested in many courses and settings. The central learning design problem is that running digital field experiments and AB tests is a complex skill, drawing on many component skills that go beyond traditional experimental design courses.

Learning science theory suggests that, to reduce compartmentalization and support the formation of complex skills, we can use authentic tasks within learning design frameworks such as 4C/ID (see also the lesson redesign example for a more extended discussion of this approach) and engage students in active learning.

Over multiple iterations, I developed cases in this direction as modules and tasks for different courses at UofT and HSE University, as well as for workshops.

In addition to tasks and learning materials, I also outline topics that can serve as the foundation of a course that follows (or is integrated with) an experimental design course for data science, analytics, and information engineering.

Course Outline

- Practical Issues in Planning and Analyzing Experiments: Simulation-based power analysis for complex designs, cost-based power analysis, common issues in online experiments

- Experimental designs, adaptive experiments and bridging offline and online learning: Multiarmed vs. factorial designs and power/precision considerations. 2^k case. Improving power with baseline covariates. CUPED. Sequential experiments. Bayesian AB testing. Multiarmed bandits, contextual bandits. Bridging online and offline learning with rich contexts for bandits learned from data.

- Behavioral Decision Making and Visualization: Representing experimental data in the presence of biases. Data visualization and effect size communication strategies. Cost-benefit analysis. Bayesian decision theory framework for experiments. Decision-making scenario simulations.

- Experimentation culture and decision-making: Planning an experimental program as an optimization problem. Multiphase optimization strategy. Knowledge management in organizations. Meta-analysis. Post-hoc analysis in the planning of experimental programs.
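As a hedged illustration of the simulation-based power analysis topic in the outline above, the sketch below estimates power for a two-arm conversion experiment by simulating many experiments and counting how often a pooled two-proportion z-test rejects the null. The function name `simulated_power`, the conversion rates, and the sample sizes are illustrative assumptions rather than actual course materials.

```python
import math
import numpy as np


def simulated_power(p_control, p_treatment, n_per_arm,
                    alpha=0.05, n_sims=2000, seed=0):
    """Estimate power of a two-sided pooled z-test by Monte Carlo simulation."""
    rng = np.random.default_rng(seed)
    # Simulate the number of conversions in each arm for every experiment.
    x_c = rng.binomial(n_per_arm, p_control, size=n_sims)
    x_t = rng.binomial(n_per_arm, p_treatment, size=n_sims)
    rate_c = x_c / n_per_arm
    rate_t = x_t / n_per_arm
    # Pooled standard error under the null of equal conversion rates.
    pooled = (x_c + x_t) / (2 * n_per_arm)
    se = np.sqrt(2 * pooled * (1 - pooled) / n_per_arm)
    # Guard against a zero standard error (no variation in the simulated data).
    z = np.divide(rate_t - rate_c, se, out=np.zeros_like(se), where=se > 0)
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_values = np.array([math.erfc(abs(v) / math.sqrt(2)) for v in z])
    # Power is the share of simulated experiments that reject at level alpha.
    return float(np.mean(p_values < alpha))


if __name__ == "__main__":
    # Hypothetical scenario: 10% baseline conversion, 12% under treatment.
    for n in (500, 2000, 5000):
        print(n, simulated_power(0.10, 0.12, n_per_arm=n))
```

The same loop extends to designs where closed-form power formulas are awkward (for example clustered or multi-arm designs): only the data-generating step and the test statistic need to change.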

Sample Reading List

- I. Bojinov and S. Gupta, “Online Experimentation: Benefits, Operational and Methodological Challenges, and Scaling Guide,” Harvard Data Science Review, vol. 4, no. 3, Jul. 2022, doi: 10.1162/99608f92.a579756e.

- R. Kohavi, A. Deng, and L. Vermeer, “A/B Testing Intuition Busters: Common Misunderstandings in Online Controlled Experiments,” in Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Washington DC USA: ACM, Aug. 2022, pp. 3168–3177. doi: 10.1145/3534678.3539160.

- D. Lakens, Improving Your Statistical Inferences. Zenodo, 2022. doi: 10.5281/ZENODO.6409077.

- L. Page, Optimally Irrational: The Good Reasons We Behave the Way We Do. Cambridge, United Kingdom: Cambridge University Press, 2023.

- J. Ruscio and T. Mullen, “Confidence Intervals for the Probability of Superiority Effect Size Measure and the Area Under a Receiver Operating Characteristic Curve,” Multivariate Behavioral Research, vol. 47, no. 2, pp. 201–223, Mar. 2012, doi: 10.1080/00273171.2012.658329.

- J. Ruscio, “A probability-based measure of effect size: Robustness to base rates and other factors,” Psychological Methods, vol. 13, no. 1, pp. 19–30, 2008, doi: 10.1037/1082-989X.13.1.19.

- S. Zhang, P. R. Heck, M. N. Meyer, C. F. Chabris, D. G. Goldstein, and J. M. Hofman, “An illusion of predictability in scientific results: Even experts confuse inferential uncertainty and outcome variability,” Proc. Natl. Acad. Sci. U.S.A., vol. 120, no. 33, p. e2302491120, Aug. 2023, doi: 10.1073/pnas.2302491120.

- J. M. Hofman, D. G. Goldstein, and J. Hullman, “How Visualizing Inferential Uncertainty Can Mislead Readers About Treatment Effects in Scientific Results,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu HI USA: ACM, Apr. 2020, pp. 1–12. doi: 10.1145/3313831.3376454.

- D. J. Hand, “On Comparing Two Treatments,” The American Statistician, vol. 46, no. 3, pp. 190–192, Aug. 1992, doi: 10.1080/00031305.1992.10475881.

- L. M. Collins, Optimization of Behavioral, Biobehavioral, and Biomedical Interventions: The Multiphase Optimization Strategy (MOST), in Statistics for Social and Behavioral Sciences. Cham: Springer International Publishing, 2018. doi: 10.1007/978-3-319-72206-1.

- A. Kale, S. Lee, T. Goan, E. Tipton, and J. Hullman, “MetaExplorer: Facilitating Reasoning with Epistemic Uncertainty in Meta-analysis,” in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, in CHI ’23. New York, NY, USA: Association for Computing Machinery, Apr. 2023, pp. 1–14. doi: 10.1145/3544548.3580869.

- M. A. Kraft, “The Effect-Size Benchmark That Matters Most: Education Interventions Often Fail,” Educational Researcher, vol. 52, no. 3, pp. 183–187, Apr. 2023, doi: 10.3102/0013189X231155154.

Examples of some developed materials

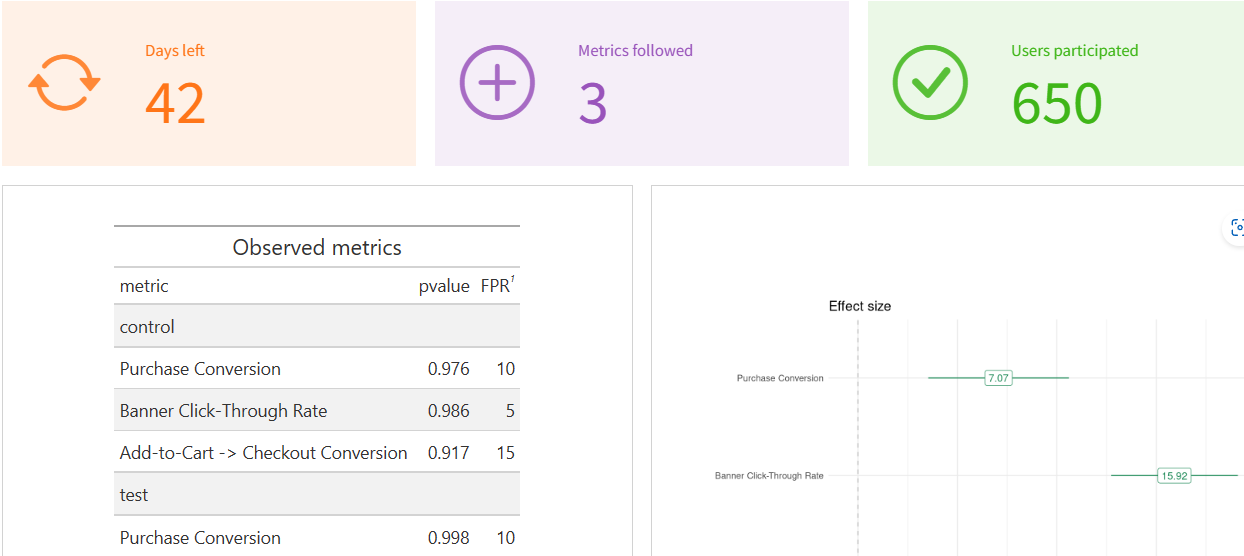

- Sample case reproducing an industrial experimentation framework and a situation in which students must make a decision or diagnose an experiment. Task classes of different complexity:

  - make a decision based on the available information,

  - decide whether enough information is provided,

  - diagnose potential problems,

  - suggest additional metrics and analyses needed to solve the case

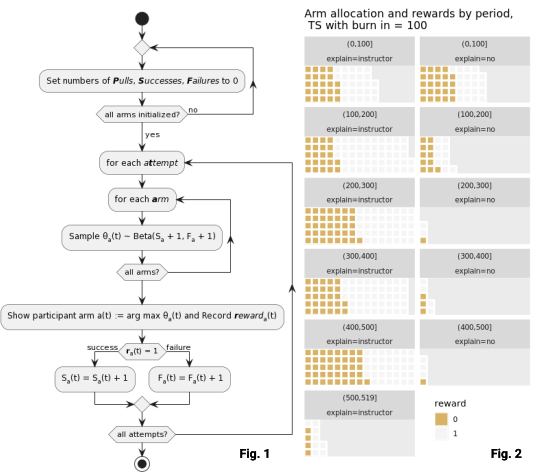

- Visualization and algorithm outline for a simple multiarmed bandit experiment, giving visual intuition for how a bandit algorithm behaves over time in a particular case
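To make the bandit dynamics concrete, here is a minimal sketch of one common algorithm, Thompson sampling with Beta priors on binary rewards. This is an assumption for illustration: the figure above may be based on a different algorithm, and the function name `thompson_sampling` and the arm conversion rates are hypothetical.

```python
import numpy as np


def thompson_sampling(true_rates, n_rounds=3000, seed=0):
    """Run a Beta-Bernoulli Thompson sampling bandit on simulated arms."""
    rng = np.random.default_rng(seed)
    k = len(true_rates)
    successes = np.zeros(k)
    failures = np.zeros(k)
    pulls = np.zeros(k, dtype=int)
    for _ in range(n_rounds):
        # Draw one plausible conversion rate per arm from its Beta posterior
        # (uniform Beta(1, 1) prior plus the observed successes and failures).
        sampled_rates = rng.beta(successes + 1, failures + 1)
        # Assign the next participant to the arm with the highest draw.
        arm = int(np.argmax(sampled_rates))
        # Simulate a binary outcome from the arm's true (unknown) rate.
        reward = int(rng.random() < true_rates[arm])
        successes[arm] += reward
        failures[arm] += 1 - reward
        pulls[arm] += 1
    return pulls, successes


if __name__ == "__main__":
    pulls, successes = thompson_sampling([0.05, 0.10, 0.30])
    print("participants per arm:", pulls)
    print("observed rate per arm:", successes / np.maximum(pulls, 1))
```

Early rounds spread participants across arms because the posteriors are wide; as evidence accumulates, allocation concentrates on the arm that appears best. Logging `pulls` after every round would give the data for an allocation-over-time plot.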

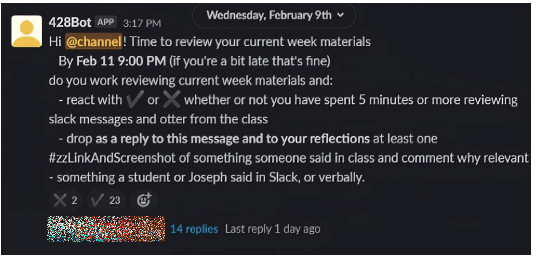

- Example of a potential group nudge in a course Slack, produced by a system that lets students co-design, discuss, and evaluate interventions. This increases engagement with the intervention design stage, which is rarely covered in university courses

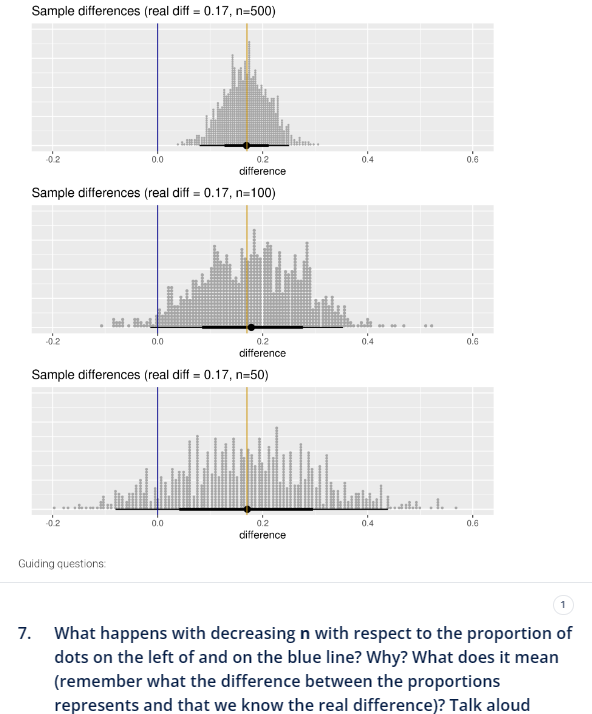

- A fragment of a learning module that reviews and consolidates student intuition about decision-making based on hypothesis tests (simulation-based examples), developed for CSC2558
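One simulation of the kind such a module could use, offered as a sketch and not taken from the CSC2558 materials, is an A/A test that compares a single planned analysis with repeatedly checking for significance as data arrive. The function name `peeking_false_positive_rates`, the known unit variance, and the look schedule are illustrative assumptions.

```python
import math
import numpy as np


def peeking_false_positive_rates(n_per_arm=1000, n_looks=10,
                                 alpha=0.05, n_sims=2000, seed=0):
    """Compare false positive rates: one final test vs. stopping at any look."""
    rng = np.random.default_rng(seed)
    # A/A test: both arms share one distribution, so every rejection is false.
    control = rng.normal(size=(n_sims, n_per_arm))
    treatment = rng.normal(size=(n_sims, n_per_arm))
    # Running sum of pairwise differences gives the statistic at any sample size.
    diff_cumsum = np.cumsum(treatment - control, axis=1)
    # Equally spaced interim looks, ending at the full sample size.
    look_sizes = np.arange(1, n_looks + 1) * n_per_arm // n_looks
    # z = (difference in means) / sqrt(2 / n), assuming known unit variance.
    z = diff_cumsum[:, look_sizes - 1] / np.sqrt(2 * look_sizes)
    # Two-sided p-values from the standard normal distribution.
    p_values = np.vectorize(math.erfc)(np.abs(z) / math.sqrt(2))
    fixed = float(np.mean(p_values[:, -1] < alpha))
    peeking = float(np.mean((p_values < alpha).any(axis=1)))
    return fixed, peeking


if __name__ == "__main__":
    fixed, peeking = peeking_false_positive_rates()
    print("single final analysis:", fixed)
    print("stop at first significant look:", peeking)
```

The single final analysis should reject at a rate close to `alpha`, while declaring a winner at the first significant look rejects noticeably more often. Students can vary `n_looks` to see how the inflation grows.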

Related work reporting on parts of this project

- I. Musabirov, “Challenges and Opportunities of Infrastructure-Enabled Experimental Research in Computer Science Education,” in Proceedings of the 54th ACM Technical Symposium on Computer Science Education V. 2, in SIGCSE 2023. New York, NY, USA: Association for Computing Machinery, 2023, p. 1265. doi: 10.1145/3545947.3573242.

- Ilya Musabirov, Angela Zavaleta Bernuy, Michael Liut, Joseph Williams. A Case Study in Opportunities for Adaptive Experiments to Enable Rapid Continuous Improvement. Poster at the 54th ACM Technical Symposium on Computer Science Education

- Mohi Reza, Ilya Musabirov, Nathan Laundry et al. Using A/B Testing as a Pedagogical Tool for Iterative Design in HCI Classrooms. EduCHI 2023: 5th Annual Symposium on HCI Education

- Joseph Jay Williams, Nathan Laundry, Ilya Musabirov, Angela Zavaleta Bernuy, and Michael Liut. 2023. Designing, Deploying, and Analyzing Adaptive Educational Field Experiments. Workshop at SIGCSE TS ’23